And Find Your Golden Needle With Predictive Analytics

I consider myself a Data Whisperer. Why? Because after working with big data for over two decades, I am able to discover meaningful patterns and relationships – the “golden needles” that tell the story hidden in the data.

So, how can you, too, pull the important “needles” out of your structured or unstructured data haystack? How can you sift through information efficiently and accurately while staying on budget and saving time?

The answer is: incorporate predictive analytics into your eDiscovery workflow!

When the analytical tools Predictive Coding, Conceptual Analytics, Email Redundancy and Relationships, and Near-Duplicate Detection are combined with the techniques of Keyword Searching and Quality Assurance, your team will gain comprehensive insight into your data. Here is an overview of each and how it can improve your big data analysis.

Predictive Coding

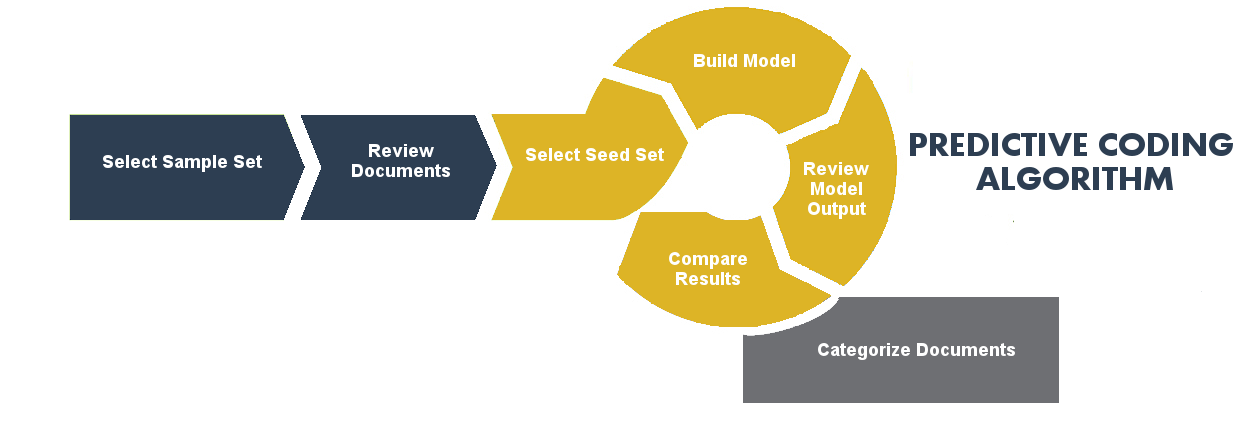

Here is a walkthrough of one version of our predictive coding workflow. It should not be taken as the only approach to predictive coding, since tools and workflows differ from platform to platform.

1. Create Sample View

The first step of the Assisted Review process is to create a sample, the Sample View, of the overall document population under review: a statistically sound number of documents drawn at random from that population. The Sample View serves as the control set against which the model’s predictions (generated later in the process) are measured, to validate their accuracy and establish statistically sound performance metrics.
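“Statistically sound” usually means a sample size chosen for a target confidence level and margin of error. The sketch below uses the standard sample-size formula for a proportion with a finite population correction. The 95% confidence level, ±2% margin of error and the function names are illustrative assumptions, not settings taken from any particular review platform.

```python
import math
import random

def control_set_size(population, z=1.96, margin=0.02, p=0.5):
    """Sample size for estimating a proportion, with finite population correction.

    Illustrative defaults: z = 1.96 (about 95% confidence), a +/-2% margin of
    error, and p = 0.5, the most conservative guess at the responsiveness rate.
    """
    n0 = (z ** 2) * p * (1 - p) / margin ** 2   # size for an infinite population
    n = n0 / (1 + (n0 - 1) / population)        # finite population correction
    return math.ceil(n)

def draw_sample_view(doc_ids, seed=42):
    """Draw the control set at random; a fixed seed makes the draw reproducible."""
    doc_ids = list(doc_ids)
    size = min(control_set_size(len(doc_ids)), len(doc_ids))
    return random.Random(seed).sample(doc_ids, size)

# For 100,000 documents these assumptions give 2,345 control documents.
sample_view = draw_sample_view(range(100_000))
```

Because of the finite population correction, the required sample grows much more slowly than the population: the same assumptions give 1,623 documents for a 5,000-document population.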

2. Review Documents

After the Sample View is created, expert reviewers review the sampled documents and code each one into one of two mutually exclusive categories: responsive or non-responsive.

3. Code Seed Set

After the Sample View has been reviewed and coded, reviewers are given a Seed Set: a predominantly random sample of documents from the target population. The Seed Set is the foundation of the Assisted Review training process. The review team codes each seed document using two opposing tags, most commonly relevant and non-relevant.
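A sketch of drawing the seed set, assuming (for simplicity) a purely random draw and that seed documents come from outside the Sample View, so the control set is never used for training. The function name and parameters are hypothetical.

```python
import random

def draw_seed_set(all_doc_ids, sample_view_ids, size, seed=7):
    """Draw a random seed set from documents that are not in the control set.

    Keeping the two sets disjoint means the model is never validated on
    documents it was trained on.
    """
    held_out = set(sample_view_ids)
    candidates = [d for d in all_doc_ids if d not in held_out]
    if size > len(candidates):
        raise ValueError("seed set is larger than the available documents")
    return random.Random(seed).sample(candidates, size)

# Example: 1,000 documents, the first 100 reserved as the Sample View.
seed_set = draw_seed_set(range(1_000), range(100), size=200)
```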

4. Build Model

Once the review of the Seed Set is complete, the underlying algorithm builds a model that attempts to replicate the reviewers’ coding decisions on the unreviewed documents. Its predictions are then validated against the reserved Sample View of control documents to generate performance metrics.
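The article does not say which algorithm builds the model, and commercial tools use their own. Purely to illustrate the idea of learning from coded seed documents, this sketch substitutes a simple multinomial Naive Bayes text classifier with add-one smoothing. The class name, tokenizer and example documents are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayesModel:
    """Multinomial Naive Bayes with add-one smoothing (an illustrative stand-in)."""

    def fit(self, docs, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.labels}
        for text, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.labels}
        return self

    def predict(self, text):
        """Return the label with the highest log-probability for `text`."""
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for c in self.labels:
            score = math.log(self.doc_counts[c] / n_docs)  # class prior
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.word_counts[c][word] + 1) / (self.totals[c] + v))
            if score > best_score:
                best_label, best_score = c, score
        return best_label

seed_docs = ["merger pricing agreement draft", "pricing terms for the merger",
             "lunch order for friday", "office party friday"]
seed_labels = ["relevant", "relevant", "non-relevant", "non-relevant"]
model = NaiveBayesModel().fit(seed_docs, seed_labels)
print(model.predict("revised merger pricing"))  # relevant
```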

5. Review Model Output

During each iteration, the model’s results are tested against the previously coded Sample View. The model’s performance is measured by how closely its predictions match the reviewers’ relevancy calls on the sample documents.
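Comparing model output with the Sample View usually comes down to a confusion matrix and metrics such as precision (how many predicted-responsive documents really are responsive) and recall (how many truly responsive documents the model found). A minimal sketch, assuming the human calls and the model calls are both dicts keyed by document ID:

```python
def performance_metrics(human_calls, model_calls, positive="responsive"):
    """Compare model predictions to the human coding of the Sample View."""
    tp = fp = fn = tn = 0
    for doc_id, truth in human_calls.items():
        predicted = model_calls[doc_id]
        if predicted == positive and truth == positive:
            tp += 1   # model and reviewer agree: responsive
        elif predicted == positive:
            fp += 1   # model says responsive, reviewer says not
        elif truth == positive:
            fn += 1   # model missed a responsive document
        else:
            tn += 1   # both say non-responsive
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "tp": tp, "fp": fp, "fn": fn, "tn": tn}
```

Recall is often the figure that matters most in review, since it estimates how much responsive material the model would leave behind.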

In this step, machine review (the model output) is compared with human review. If the model’s performance does not meet expected levels, it can be retrained iteratively until it is considered stable.
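What counts as “stable” varies by platform and protocol. As one hypothetical rule, the sketch below treats the model as stable once its F1 score on the control set has stayed within a small tolerance over the last few training rounds and has reached a target. The tolerance, window, target and the F1 values in the loop are all made-up assumptions.

```python
def is_stable(f1_history, tolerance=0.01, window=3, target=0.75):
    """Hypothetical stability rule for iterative retraining.

    Stable means: at least `window` rounds have run, the last `window` F1
    scores all lie within `tolerance` of each other, and the latest score
    meets `target`.
    """
    if len(f1_history) < window:
        return False
    recent = f1_history[-window:]
    return max(recent) - min(recent) <= tolerance and recent[-1] >= target

# F1 measured against the Sample View after each training round (made-up numbers).
history = []
for f1 in [0.62, 0.74, 0.81, 0.815, 0.818]:
    history.append(f1)
    if is_stable(history):
        print(f"stable after round {len(history)}")  # stable after round 5
        break
```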

Finally, when the model is judged stable and its predictions agree with the Sample View coding at an acceptable level, the remaining documents are grouped into categories according to the model’s predictions.

Conceptual Analytics

Conceptual analytics organizes data by grouping, or clustering, documents that the system perceives as having similar meaning. The algorithm at the core of the process examines the text throughout the dataset to find related keywords and builds a lexicon that includes synonyms of those keywords. Once related keywords are identified, each document is placed in a cluster with other documents like it, and the cluster’s keywords are displayed to show the reviewer the concepts it represents. Including conceptual analytics in your workflow brings several benefits:

- Improved Accuracy In Document Review & Processed Data

- Clusters Offer Clear Organization For End Users

- Quality Control Methods Automatically Incorporated

- Minimizes The Need For Hands-On Human Review
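Commercial concept engines commonly rely on techniques such as latent semantic indexing, which can link documents that use different words for the same idea. The simplified stand-in below instead clusters documents by TF-IDF cosine similarity, so it only groups documents that share terms, and labels each cluster with its highest-weighted keywords. The threshold and function names are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter

def tfidf_vectors(docs):
    """Weight each term by its count times a smoothed inverse document frequency."""
    tokenized = [re.findall(r"[a-z]+", d.lower()) for d in docs]
    doc_freq = Counter(word for toks in tokenized for word in set(toks))
    n = len(docs)
    return [{w: count * (math.log(n / doc_freq[w]) + 1.0)
             for w, count in Counter(toks).items()}
            for toks in tokenized]

def cosine(a, b):
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def cluster(docs, threshold=0.3, top_k=3):
    """Single-pass clustering: join the first cluster whose leader is similar enough."""
    vectors = tfidf_vectors(docs)
    clusters = []  # each entry: {"leader": vector, "members": [document indices]}
    for i, vec in enumerate(vectors):
        for c in clusters:
            if cosine(vec, c["leader"]) >= threshold:
                c["members"].append(i)
                break
        else:
            clusters.append({"leader": vec, "members": [i]})
    result = []
    for c in clusters:
        totals = Counter()
        for i in c["members"]:
            totals.update(vectors[i])  # sum term weights across the cluster
        keywords = [w for w, _ in totals.most_common(top_k)]
        result.append((keywords, c["members"]))
    return result

docs = ["oil drilling lease permit", "drilling permit for the oil lease",
        "quarterly revenue forecast spreadsheet",
        "revenue forecast spreadsheet for next quarter"]
for keywords, members in cluster(docs):
    print(keywords, members)
```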

Email Redundancy and Relationships

In 2015, The Radicati Group, a technology market research firm, reported that 205.6 billion emails were sent worldwide per day, which works out to over 75 trillion emails annually. Managing email threads in litigation is crucial to avoid losing time, money and important details buried in communications. Our platform simplifies this with the Email Relationship and Email Redundancy Algorithms.

The Email Relationship Algorithm is extremely helpful for reviewing long threads among multiple custodians. It displays the relationships between senders, recipients, domains and custodians: users can view the dates and total number of communications between correspondents, domains are shown so reviewers can spot custodians who may be using different domains or aliases, and high-volume communications are visually displayed by date.
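A minimal sketch of the bookkeeping behind an email relationship view: count messages and date ranges per pair of correspondents, and flag custodians who send from more than one domain. The message format is an assumption: dicts with hypothetical `custodian`, `from`, `to` and `date` fields, where `custodian` names the person behind the `from` address.

```python
from collections import defaultdict
from datetime import date

def email_relationships(messages):
    """Summarize traffic per correspondent pair and find multi-domain custodians."""
    pairs = defaultdict(list)           # (address, address) -> send dates
    sender_domains = defaultdict(set)   # custodian -> domains they sent from
    for msg in messages:
        sender = msg["from"].lower()
        sender_domains[msg["custodian"]].add(sender.rsplit("@", 1)[-1])
        for rcpt in msg["to"]:
            pair = tuple(sorted((sender, rcpt.lower())))  # direction-independent
            pairs[pair].append(msg["date"])
    summary = {pair: {"count": len(ds), "first": min(ds), "last": max(ds)}
               for pair, ds in pairs.items()}
    # Custodians sending from more than one domain may be using aliases.
    multi_domain = {c: d for c, d in sender_domains.items() if len(d) > 1}
    return summary, multi_domain

messages = [
    {"custodian": "Ann", "from": "ann@acme.com", "to": ["bob@acme.com"], "date": date(2015, 3, 1)},
    {"custodian": "Bob", "from": "bob@acme.com", "to": ["ann@acme.com"], "date": date(2015, 3, 4)},
    {"custodian": "Ann", "from": "ann.s@gmail.com", "to": ["bob@acme.com"], "date": date(2015, 3, 9)},
]
summary, multi_domain = email_relationships(messages)
```

Here Ann and Bob exchanged two messages at their work addresses, and Ann is flagged for also sending from a second domain.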

The Email Redundancy Algorithm searches the content of each message to determine whether earlier messages in a thread are contained within later messages of the same thread. The tool reveals gaps in an email thread and groups threads that span multiple custodians. All emails in a thread can be tagged at once, reducing the risk of human error from coding related emails inconsistently.
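One simple way to illustrate redundancy detection, assuming message bodies include the quoted text of earlier replies: normalize each body and mark a message as redundant when its full text appears inside a longer message in the same thread. Only the remaining messages then need review. Real tools handle signatures, attachments and reformatted quotes far more robustly; the function names here are hypothetical.

```python
def normalize(text):
    """Lowercase, drop leading '>' quote markers, and collapse whitespace."""
    lines = [line.lstrip("> ").strip() for line in text.lower().splitlines()]
    return " ".join(" ".join(lines).split())

def redundant_messages(thread):
    """Return ids of messages whose full text appears inside a longer message."""
    norm = {mid: normalize(body) for mid, body in thread.items()}
    redundant = set()
    for mid, text in norm.items():
        for other_id, other_text in norm.items():
            if (other_id != mid and text and text in other_text
                    and len(other_text) > len(text)):
                redundant.add(mid)
                break
    return redundant

thread = {
    "m1": "Can you send the Q3 numbers?",
    "m2": "Attached.\n> Can you send the Q3 numbers?",
    "m3": "Thanks, got it.\n> Attached.\n> > Can you send the Q3 numbers?",
}
print(sorted(redundant_messages(thread)))  # ['m1', 'm2']
```

Only `m3` needs review here, because it contains the full text of both earlier messages. Exact duplicates are left for deduplication, since neither copy is longer than the other.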

Near-Duplicate Detection

The near-duplicate algorithm compares the text of documents and groups those that are highly similar. Near-duplicate detection reduces the time spent on document review and provides quality assurance, because documents with similar content can be reviewed together and coded consistently.
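The article does not name the similarity measure. A common textbook approach, used here as an assumption, breaks each document into overlapping word triples (shingles), scores each pair by Jaccard similarity, and merges pairs above a threshold into groups with union-find. The threshold and function names are illustrative.

```python
import re
from itertools import combinations

def shingles(text, k=3):
    """Set of overlapping k-word sequences; very short texts yield one shingle."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}

def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 1.0

def near_duplicate_groups(docs, threshold=0.5, k=3):
    """Group documents whose shingle sets overlap by at least `threshold`."""
    sets = {doc_id: shingles(text, k) for doc_id, text in docs.items()}
    parent = {doc_id: doc_id for doc_id in docs}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b in combinations(docs, 2):
        if jaccard(sets[a], sets[b]) >= threshold:
            parent[find(a)] = find(b)  # union the two groups
    groups = {}
    for doc_id in docs:
        groups.setdefault(find(doc_id), []).append(doc_id)
    return sorted(groups.values())

docs = {
    "A": "The supplier agreed to deliver 500 units by June 30 at the quoted price.",
    "B": "The supplier agreed to deliver 500 units by June 15 at the quoted price.",
    "C": "Please RSVP for the holiday party by Friday.",
}
print(near_duplicate_groups(docs))  # [['A', 'B'], ['C']]
```

Comparing every pair is fine for a sketch; at production scale, techniques such as MinHash are typically used to avoid the quadratic comparison.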

Keyword Searching

Some believe that keyword searching has lived a long, good life and should be put to rest.

I do not agree.

I do believe, however, that relying on keyword searching alone is not effective, especially with large datasets. A dataset contains many patterns and relationships that a simple keyword search cannot capture; on its own, a keyword search will return many false positives and may exclude important related documents. If the agreed protocol between counsel includes the use of search terms, the team must be familiar with the case in order to develop the most effective keywords, and the methodology should be strategic, deliberate, discussed and tested.
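Testing search terms can be as simple as running them against a sample that reviewers have already coded and checking how many hits are actually responsive (precision) and how many responsive documents each term finds (recall). A sketch, assuming a trailing `*` wildcard convention and hypothetical function names:

```python
import re

def term_to_regex(term):
    """Translate a search term, optionally ending in a '*' wildcard, to a regex."""
    if term.endswith("*"):
        return re.compile(r"\b" + re.escape(term[:-1]) + r"\w*", re.IGNORECASE)
    return re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)

def evaluate_terms(terms, coded_sample):
    """Report hit count, precision and recall of each term on a human-coded sample.

    coded_sample: list of (text, is_responsive) pairs.
    """
    total_responsive = sum(1 for _, resp in coded_sample if resp)
    report = {}
    for term in terms:
        pattern = term_to_regex(term)
        hits = [resp for text, resp in coded_sample if pattern.search(text)]
        true_hits = sum(hits)
        report[term] = {
            "hits": len(hits),
            "precision": true_hits / len(hits) if hits else 0.0,
            "recall": true_hits / total_responsive if total_responsive else 0.0,
        }
    return report

sample = [
    ("Draft contract for the pipeline", True),
    ("Contractor invoice overdue", False),
    ("Signed contracts attached", True),
    ("Lunch menu", False),
    ("Pipeline safety memo", True),
]
report = evaluate_terms(["contract*", "pipeline"], sample)
```

In this toy sample, `contract*` also hits “Contractor”, a false positive of exactly the kind that testing is meant to surface before terms are agreed.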

Quality Assurance

As a government eDiscovery quality assurance vendor, we developed a proprietary software program that ran sampling algorithms on the government’s eDiscovery datasets. By applying statistical sampling, we were able to accurately assess the quality of the data. The program compared the sampled data against the government’s specifications and reported any anomalies found in the random sample. Two examples of how these algorithms can be used when predictive analytics are not part of the workflow:

- Verifying the reliability of keyword searches.

- Determining whether reported processing errors are actually errors.
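A sketch of the sampling idea behind both uses, assuming a caller-supplied `check` function (hypothetical) that compares an item against the specification: draw a random sample, count failures, and report the failure rate with a 95% Wilson score confidence interval.

```python
import math
import random

def wilson_interval(failures, n, z=1.96):
    """Wilson score interval for a proportion (z = 1.96 gives about 95% confidence)."""
    if n == 0:
        raise ValueError("empty sample")
    p = failures / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)

def qa_sample(items, check, sample_size, seed=1):
    """Randomly sample items, apply a pass/fail check, and estimate the failure rate."""
    sample = random.Random(seed).sample(items, sample_size)
    failures = [item for item in sample if not check(item)]
    low, high = wilson_interval(len(failures), sample_size)
    return {"sampled": sample_size, "failures": failures,
            "rate": len(failures) / sample_size, "ci95": (low, high)}

# Hypothetical check: every tenth item violates the specification.
result = qa_sample(list(range(1_000)), lambda x: x % 10 != 0, sample_size=200)
```

The Wilson interval is used instead of the simpler normal approximation because it stays sensible when the sample finds few or no errors, which is common in QA sampling.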

Case law supporting the use of sampling can be found in McPeek v. Ashcroft (“McPeek II”), 212 F.R.D. 33 (D.D.C. 2003). After the court ordered the “sampling” of a large collection of backup tapes, it held that the resulting data supported further discovery of only one of the tapes.

Conclusion

Your odds of finding the needle in the haystack greatly improve when you add predictive analytics to your workflow. The eDiscovery analytic processes available today are proven cost and time savers, and they can provide more early case assessment information than ever before. Whether you are reviewing 5,000 documents or 500,000, you will find analytic tools beneficial in many ways.